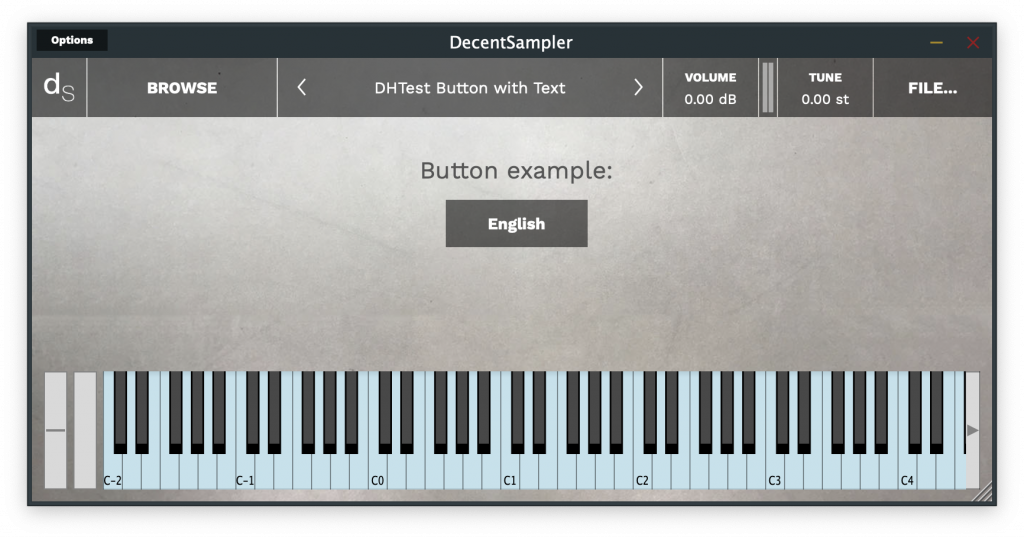

As of version 1.5.24 of Decent Sampler, it is possible to use LFOs and ADSR envelopes in your Decent Sampler sample libraries. In this blog post, we’ll go through how to set these up.

The <modulators> section

This is a new section that lives below the top-level <DecentSampler> element and it is where all modulators for the entire sample library live:

<DecentSampler>

<modulators>

<!-- Your modulators go here. -->

</modulators>

</DecentSampler>

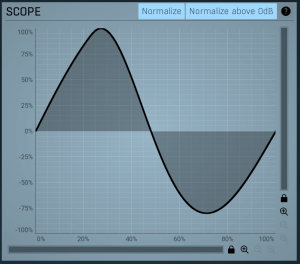

The <lfo> element

Underneath the <modulators> section, you can have any number of different LFOs, which are defined using an <lfo> element, for example:

<modulators>

<lfo shape="sine" frequency="2" modAmount="1.0"></lfo>

</modulators>

This element has the following attributes:

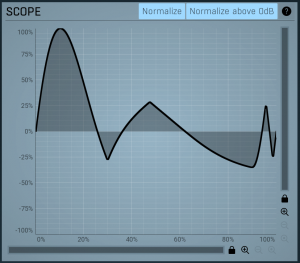

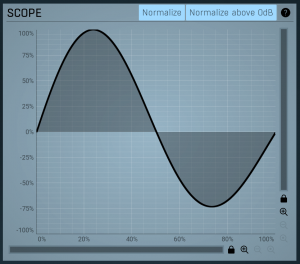

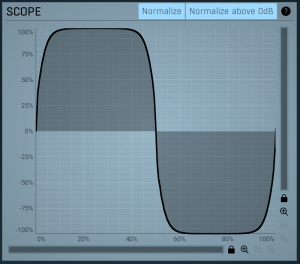

- shape: Controls the oscillator shape. Possible values are sine, square, saw.

- frequency: The speed of the LFO in cycles per second. For example, a value of 10 would mean that the waveform repeats ten times per second.

- modAmount: This value between 0 and 1 controls how much the modulation affects the things it is targeting. In conventional terms, this is like the modulation depth. Default value: 1.0.

- scope: Whether or not this LFO exists for all notes or whether each keypress gets its own LFO. Possible values are global (default for LFOs) and voice. If voice is chosen, a new LFO is started each time a new note is pressed.
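As a sketch of the scope attribute in use, the following LFO is created per keypress rather than shared by all notes. The attribute values are illustrative, picked from the options listed above, and are not taken from the example pack.

```xml
<modulators>
  <!-- Illustrative values: a saw LFO at 10 cycles per second, at half depth. -->
  <!-- scope="voice" starts a new LFO each time a new note is pressed. -->
  <lfo shape="saw" frequency="10" modAmount="0.5" scope="voice"></lfo>
</modulators>
```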

The <envelope> element

In addition to LFOs, you can also have additional ADSR envelopes. These can be useful for controlling group-level effects, such as low-pass filters. If this is what you wish to achieve, make sure you check out the section on group-level effects below.

To create an envelope, use an <envelope> element. This element has the following attributes:

- attack: The length in seconds of the attack portion of the ADSR envelope.

- decay: The length in seconds of the decay portion of the ADSR envelope.

- sustain: The height of the sustain portion of the ADSR envelope. This is expressed as a value between 0 and 1.

- release: The length in seconds of the release portion of the ADSR envelope.

- modAmount: This value between 0 and 1 controls how much the modulation affects the things it is targeting. In conventional terms, this is like the modulation depth. Default value: 1.0.

- scope: Whether this envelope exists for all notes or whether each keypress gets its own envelope. Possible values are global and voice (default for envelopes). If voice is chosen, a new envelope is started each time a new note is pressed.
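For instance, an envelope might be declared as follows. This is a minimal sketch with illustrative values, assuming only the attributes listed above.

```xml
<modulators>
  <!-- Illustrative values: 0.5 s attack, 0.2 s decay, sustain at 0.7, 1 s release. -->
  <!-- scope="voice" is the default for envelopes; it is written out here for clarity. -->
  <envelope attack="0.5" decay="0.2" sustain="0.7" release="1.0" modAmount="1.0" scope="voice"></envelope>
</modulators>
```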

How to use bindings in conjunction with modulators

In order to actually have your LFOs and envelopes do anything, you need to have bindings under them. If you are not familiar with the concept of bindings, you may want to read this section of the File Format Reference Guide and then return here. Bindings tell the engine which parameters the LFO should be affecting and how. Here is an example:

<modulators>

<lfo shape="sine" frequency="2" modAmount="1.0">

<!-- This binding modifies the frequency of a low-pass filter -->

<binding type="effect" level="instrument" effectIndex="0" parameter="FX_FILTER_FREQUENCY" modBehavior="add" translation="linear" translationOutputMin="0" translationOutputMax="2000.0" />

</lfo>

</modulators>

This is Example 1 from the example pack you can download here.

How modulator bindings differ from knob or MIDI bindings

If you’re already familiar with the concept of bindings, read this section especially carefully, as there are a few differences between bindings as they are used by knobs and bindings as they are used by modulators. Specifically, when you move a UI control that has a binding attached, the engine actually goes out and changes the value of the parameter that is targeted by that binding. For example, if you have a knob that controls a low-pass filter’s cutoff frequency, moving that knob will cause the actual frequency of that filter to change. In other words, the changes that the knob makes to the underlying sample library are permanent. The same is also true for bindings associated with MIDI continuous controllers.

Modulators, on the other hand, do not work this way. If a modulator (such as an LFO) changes its value, the engine looks at the bindings associated with that LFO and then makes a list of temporary changes to the underlying data. When it comes time to render out the effect, it consults both the permanent value and the temporary modulation values. As a result of this difference in the way bindings are handled, only some parameters are “modulatable.” At time of press, the following parameters are modulatable:

- All gain effect parameters

- All delay effect parameters

- All phaser effect parameters

- All filter effect parameters

- All reverb effect parameters

- All chorus effect parameters

- Group Volume

- Global Volume

- Group Pan

- Global Pan

- Group Tuning

- Global Tuning

The new modBehavior parameter for bindings

Another new feature of bindings is the addition of the modBehavior attribute. This controls exactly what effect a binding actually has on the parameter it is targeting. There are three possible values for this:

- set: This means that the value that is generated by the binding becomes the new value for the parameter being targeted. NOTE: set is the default value and this is the way that knobs and MIDI CC bindings work by default. That being said, it’s usually not the correct choice for modulations such as LFOs and secondary ADSR envelopes.

- add: The value generated by the binding gets added to the current value of the parameter being targeted.

- multiply: The value generated by the binding gets multiplied with the current value of the parameter being targeted.

In order to understand what any of this means, let’s look at the following example:

<effects>

<effect type="lowpass" frequency="60.0"/>

</effects>

<modulators>

<lfo shape="sine" frequency="2" modAmount="1.0">

<binding type="effect" level="instrument" position="0" parameter="FX_FILTER_FREQUENCY" translation="linear" translationOutputMin="0" translationOutputMax="2000.0" modBehavior="add" />

</lfo>

</modulators>

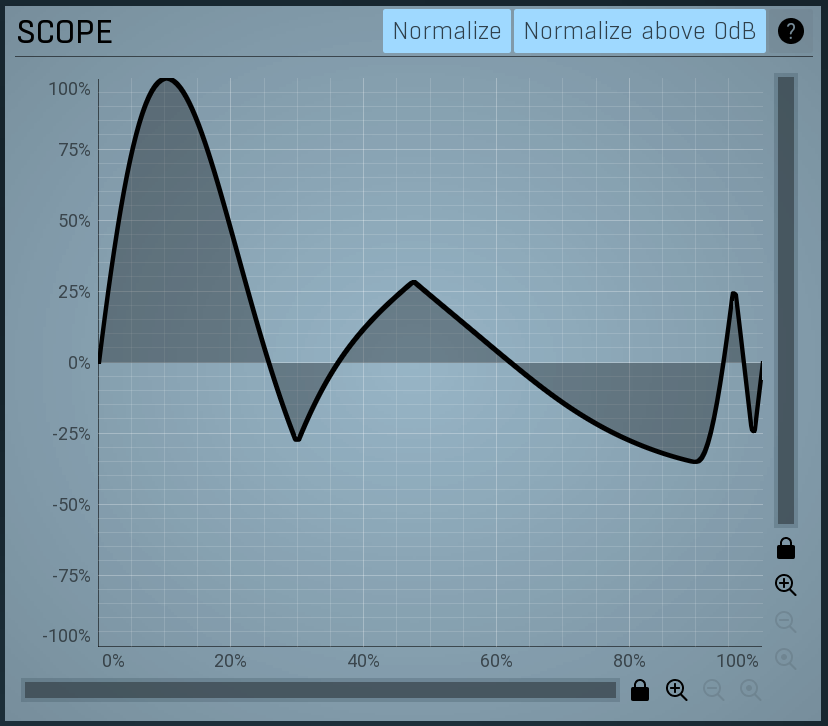

The <lfo> tag above sets up an LFO with a frequency of 2 Hz. It has just one binding, which targets the first global effect, which happens to be a low-pass filter with a cutoff frequency of 60Hz. Every binding can be seen as a pipe that takes an input value, translates that value in some fashion, and then sets a parameter somewhere else in the engine. Here are the steps for this setup:

- By default, an LFO generates values between -1.0 and 1.0.

- These values then get passed to the binding, which is set up to do a linear translation. This linear translation has a minimum of 0 and a maximum of 2000, which means that when the LFO is at its lowest point (-1.0) the binding will generate the number 0 (the minimum) and when the LFO is at its highest point (1.0), the binding will generate the number 2000 (the maximum).

- Because the modBehavior value is add, this new value that is generated by the binding will be added to the original cutoff value of 60Hz. This means that when the LFO is at its lowest point, the filter cutoff will be 60Hz (i.e. 60 + 0) and when it’s at its highest point, the filter cutoff will be 2060Hz (i.e. 60 + 2000).
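The steps above can be written out as a short calculation. This is a sketch that assumes the linear translation maps the LFO range from -1 to 1 evenly onto the range from translationOutputMin (0) to translationOutputMax (2000), as the steps describe. The symbols x (the LFO value), t(x) (the binding's output), and f(x) (the resulting cutoff) are introduced here for illustration only.

```latex
\begin{align*}
t(x) &= 0 + \frac{x + 1}{2}\,(2000 - 0) = 1000\,(x + 1), \qquad x \in [-1, 1] \\
t(-1) &= 0, \qquad t(0) = 1000, \qquad t(1) = 2000 \\
f(x) &= 60\,\text{Hz} + t(x) \\
f(-1) &= 60\,\text{Hz}, \qquad f(0) = 1060\,\text{Hz}, \qquad f(1) = 2060\,\text{Hz}
\end{align*}
```

Under this assumption, the cutoff sits at 1060Hz when the LFO passes through its midpoint.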

LFO Scope: Global or Voice-level

By default, LFOs are created at the global level. This means that there will be exactly one modulator that is shared by all voices. In many situations, such as an LFO modulating a single low-pass filter that is shared by all voices, this is what we want.

But there are other situations where we don’t want our modulator to be global. For example, what if we want to have an envelope that targets a low-pass filter? Let’s say that when we press down on a key, we want that low-pass filter to open up slowly until, 2 seconds later, it reaches its peak. In theory, we could set up something like this:

<effects>

<effect type="lowpass" frequency="60.0"/>

</effects>

<modulators>

<envelope attack="2" decay="0" sustain="1" release="0.5" modAmount="1.0">

<binding type="effect" level="instrument" position="0" parameter="FX_FILTER_FREQUENCY" translation="linear" translationOutputMin="0" translationOutputMax="4000.0" modBehavior="add" />

</envelope>

</modulators>

But there’s a problem with this: let’s imagine that we hit a note, and then one second into that first note, we hit another note. If we have just a single envelope, that envelope will be halfway through its attack phase when the second key is pressed. Depending on how the envelope is configured, that envelope will either retrigger because of the new keypress (this would be the wrong behavior for the first note, which is still being held) or keep going, in which case the second note will start halfway through its attack phase.

To solve this problem, we need to tell the engine to create a separate modulator for every keypress. To do this, we add a scope="voice" attribute to the modulator as follows:

<envelope attack="2" decay="0" sustain="1" release="0.5" modAmount="1.0" scope="voice">

But wait, there’s another problem! In the scenario above, even if we have a separate modulator for every keypress, those voices are still all sharing a single global low-pass filter. If you’ve got several modulators pinned to the same global effect, they are going to be setting and resetting that global effect’s parameters to competing values. The engine is going to be at war with itself over which envelope’s values are the correct setting for the filter’s cutoff. In other words, we need separate effects for each keypress. These can be added by specifying…

…effects at the group level

Adding effects that only apply to a specific group is easy. All you need to do is create an <effects> element that lives underneath the <group> element for the group you want to affect. For example:

<groups>

<group>

<!-- A sample -->

<sample path="Samples/Volca Keys Poly-V127-60-C3.wav" loNote="10" hiNote="83" rootNote="48"/>

<effects>

<!-- These effects will only apply to this group -->

<effect type="lowpass" frequency="22000.0"/>

</effects>

</group>

</groups>

Group-level effects are initialized every time a note is started and destroyed every time a note is stopped. If you play two notes simultaneously, two instances of this effect will be created and these will be independent of each other. As a result, they use more CPU than global effects.

NOTE: Only certain effects will work as group-level effects: lowpass filter, hipass filter, bandpass filter, gain, and chorus. Delay and reverb cannot work properly as they will be deleted before their tail peters out.

How to have knobs control group-level effects

Just as it is possible to have knobs that control instrument-level effects, it is also possible to have them control group-level effects. In order to specify a group-level effect, set the binding’s level to group, and use groupIndex and effectIndex to specify which effect needs to be controlled. Here is an example of a knob that controls a group-level low-pass filter:

<labeled-knob x="655" y="75" label="Tone" type="float" minValue="0" maxValue="1" value="1">

<binding type="effect" level="group" groupIndex="0" effectIndex="0" parameter="FX_FILTER_FREQUENCY" translation="table" translationTable="0,33;0.3,150;0.4,450;0.5,1100;0.7,4100;0.9,11000;1.0001,22000"/>

</labeled-knob>
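Because each binding names a single groupIndex, a knob that should control the same effect in several groups presumably needs one binding per group. The sketch below assumes a hypothetical library with two groups, each with a low-pass filter as its first group-level effect. The attributes are the ones used in the example above.

```xml
<labeled-knob x="655" y="75" label="Tone" type="float" minValue="0" maxValue="1" value="1">
  <!-- First group's first effect -->
  <binding type="effect" level="group" groupIndex="0" effectIndex="0" parameter="FX_FILTER_FREQUENCY" translation="table" translationTable="0,33;0.3,150;0.4,450;0.5,1100;0.7,4100;0.9,11000;1.0001,22000"/>
  <!-- Second group's first effect (the second group is hypothetical) -->
  <binding type="effect" level="group" groupIndex="1" effectIndex="0" parameter="FX_FILTER_FREQUENCY" translation="table" translationTable="0,33;0.3,150;0.4,450;0.5,1100;0.7,4100;0.9,11000;1.0001,22000"/>
</labeled-knob>
```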

Putting it all together: an envelope filter that controls a low-pass filter

So, if we put all of this into a real-world example, we can imagine an ADSR envelope that is controlling a low-pass filter as follows:

<?xml version="1.0" encoding="UTF-8"?>

<DecentSampler minVersion="1.6.0">

<groups>

<group ampVelTrack="0.0">

<sample path="Samples/Volca Keys Poly-V127-60-C3.wav" loNote="10" hiNote="83" rootNote="48"/>

<effects>

<effect type="lowpass" frequency="22000.0"/>

</effects>

</group>

</groups>

<modulators>

<envelope attack="1" decay="0.5" sustain="0" release="0.5" modAmount="1" scope="voice">

<binding type="effect" level="group" groupIndex="0" effectIndex="0" parameter="FX_FILTER_FREQUENCY" modBehavior="set" translation="table" translationTable="0,33;0.3,150;0.4,450;0.5,1100;0.7,4100;0.9,11000;1.0001,22000" />

</envelope>

</modulators>

</DecentSampler>

This is Example 2 from the example pack.

How to make knobs control modulator parameters

Both types of modulators, <lfo> and <envelope>, have a modAmount parameter. This consists of a value between 0 and 1 that dictates how much the modulation affects the things it is targeting. In conventional terms, this is like the modulation depth. To create a knob that controls LFO depth, bind the knob to the modulator’s modAmount. Here’s how you would do this:

<labeled-knob x="585" y="75" label="LFO Depth" type="float" minValue="0.0" maxValue="1" value="1" >

<binding level="instrument" type="modulator" position="0" parameter="MOD_AMOUNT" />

</labeled-knob>

Note the binding type value of modulator and a position of 0. In other words, we are targeting the first modulator in the modulator block. The parameter we are targeting is MOD_AMOUNT.

It is similarly possible to target LFO rate using a parameter value of FREQUENCY:

<labeled-knob x="655" y="75" label="LFO Rate" type="float" minValue="0.0" maxValue="10" value="1">

<binding level="instrument" type="modulator" position="0" parameter="FREQUENCY" />

</labeled-knob>

You can see a full example of a patch that controls rate and depth in Example 3 of the example pack.
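The same position indexing should apply when there is more than one modulator. Assuming position counts modulators in the order they appear inside <modulators>, starting at 0, a second modulator would be addressed with position="1". The sketch below is hypothetical: it pairs an LFO (position 0) with an envelope (position 1) and adds a knob for the envelope's depth. The bindings under the modulators are omitted for brevity.

```xml
<modulators>
  <!-- position 0 -->
  <lfo shape="sine" frequency="2" modAmount="1.0"></lfo>
  <!-- position 1 -->
  <envelope attack="1" decay="0.5" sustain="0" release="0.5" modAmount="1" scope="voice"></envelope>
</modulators>

<!-- Knob targeting the second modulator's depth; it lives wherever the library's other knobs are defined. -->
<labeled-knob x="585" y="75" label="Env Depth" type="float" minValue="0.0" maxValue="1" value="1">
  <binding level="instrument" type="modulator" position="1" parameter="MOD_AMOUNT" />
</labeled-knob>
```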

Conclusion

That’s pretty much it. We look forward to seeing what you do with LFOs and envelopes. Make sure you download the example pack from here.

The example pack contains the following examples:

- Example 1 shows how to make an LFO that controls a global lowpass filter

- Example 2 shows how to have a voice-level envelope which controls a group-level filter

- Example 3 shows how to have knobs control LFO parameters

- Example 4 shows how to have knobs control envelope parameters

- Example 5 shows how to modulate group volumes

- Example 6 shows how to modulate group panning

- Example 7 shows how to modulate group tuning